About Me

My name is Xiaodi Yuan (袁小迪), and I also go by Ada.

I am a third-year PhD candidate at UC San Diego, advised by Prof. Hao Su.

I received my B.Eng. degree from IIIS ("Yao Class"), Tsinghua University, where I worked with Prof. Li Yi on 3D shape reconstruction and with Prof. Tao Du on physics-based simulation.

My research interests are computer graphics and physics-based simulation. I am working on building fast and realistic simulation environments for embodied AI.

News

- [Jan 5, 2026] 😎 I joined Amazon Frontier AI & Robotics (FAR) as a research intern working with Prof. Guanya Shi!

Publications [More]

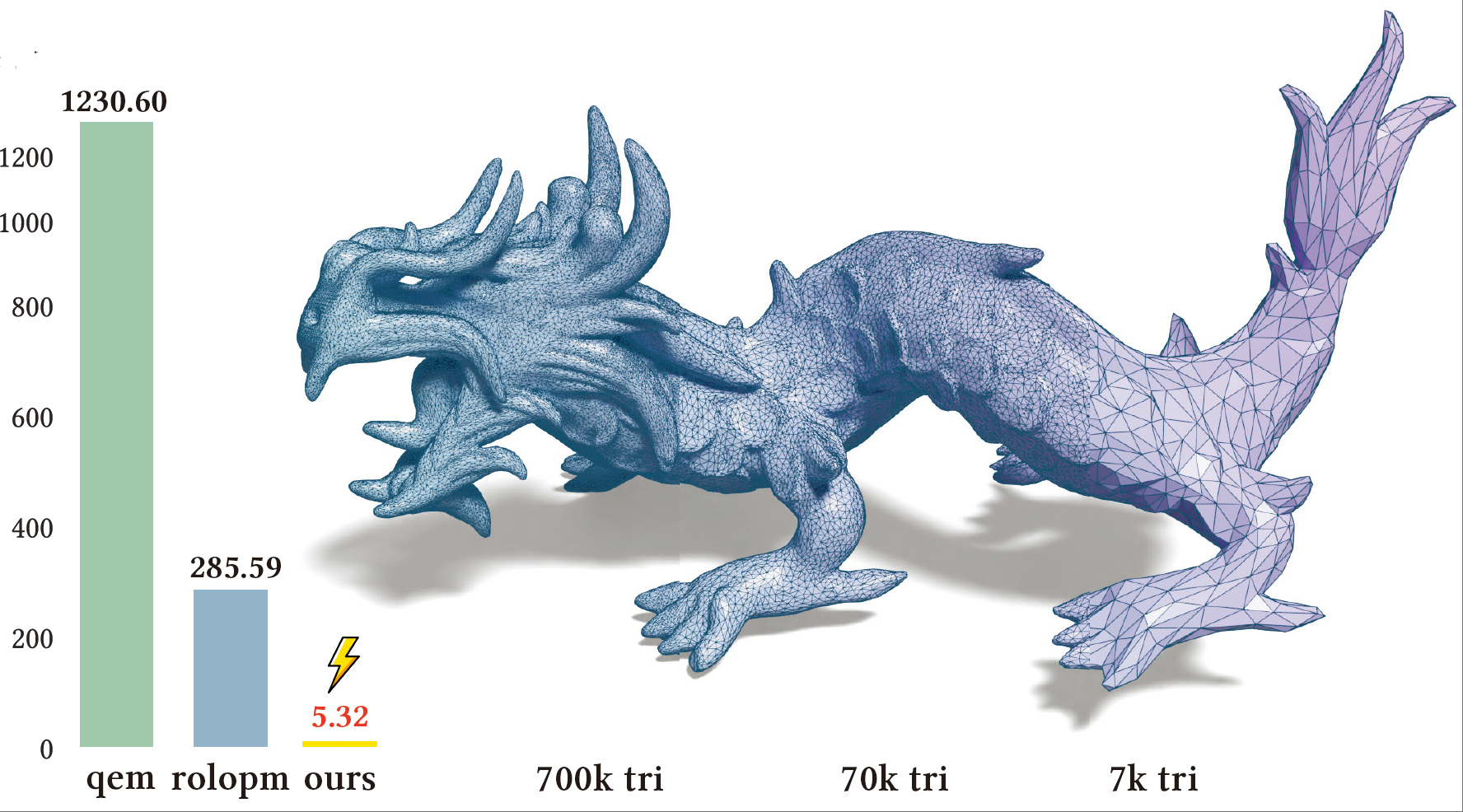

PaMO: Parallel Mesh Optimization for Intersection-Free Low-Poly Modeling on the GPU

PG 2025 (Journal Track) [PDF] [Project] [Code]

We address the self-intersections and surface distortions that existing mesh simplification methods cause. Our GPU-based approach performs parallel re-meshing, intersection-free simplification, and safe projection to produce clean, geometry-preserving meshes suitable for efficient rendering, editing, and simulation.

C⁵D: Sequential Continuous Convex Collision Detection Using Cone Casting

SIGGRAPH 2025 (Journal Track) [PDF] [Code]

Continuous Collision Detection (CCD) in IPC-based simulations is slow and often requires powerful GPUs. We introduce a sequential CCD algorithm for convex shapes with affine trajectories (as in ABD) that achieves a 10x speed-up over traditional primitive-level CCD. Our method uses cone casting, a generalization of the ray-casting CCD used in traditional physics engines for rigid bodies.

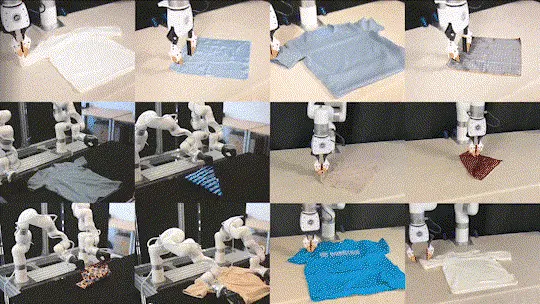

Diffusion Dynamics Models with Generative State Estimation for Cloth Manipulation

Manipulating deformable objects like cloth is difficult due to their complex dynamics and tricky state estimation. We propose a generative, transformer-based diffusion model that handles both perception and dynamics. Our method reconstructs the full cloth state from sparse observations and predicts future movement, reducing long-horizon prediction errors by an order of magnitude compared to previous methods. This framework successfully enabled a real robot to perform complex cloth folding tasks.

LodeStar: Long-horizon Dexterity via Synthetic Data Augmentation from Human Demonstrations

We propose a learning framework and system that automatically decomposes task demonstrations into semantically meaningful skills using off-the-shelf foundation models, and generates diverse synthetic demonstration datasets from a few human demos through reinforcement learning. These sim-augmented datasets enable robust skill training, with a Skill Routing Transformer (SRT) policy effectively chaining the learned skills together to execute complex long-horizon manipulation tasks.

General-Purpose Sim2Real Protocol for Learning Contact-Rich Manipulation With Marker-Based Visuotactile Sensors

TRO 2024 [PDF]

We build a general-purpose Sim2Real protocol for manipulation policy learning with marker-based visuotactile sensors. To improve the simulation fidelity, we employ an FEM-based physics simulator that can simulate the sensor deformation accurately and stably for arbitrary geometries. We further propose a novel tactile feature extraction network that directly processes the set of pixel coordinates of tactile sensor markers, along with a self-supervised pre-training strategy to improve the efficiency and generalizability of RL policies.

Projects [More]

SAPIEN SPH: A Simple GPU-based Implementation of PCISPH

Homework project for the course “Physical Simulation” at UCSD, taught by Prof. Albert Chern.

ManiSkill-ViTac: Vision-based-Tactile Manipulation Skill Learning Challenge 2024

ICRA 2024 Workshop [Project]

The ManiSkill-ViTac Challenge aims to provide a standardized benchmarking platform for evaluating the performance of vision-based-tactile manipulation skill learning in real-world robot applications. The challenge is supported by the GPU-based IPC simulator I developed.