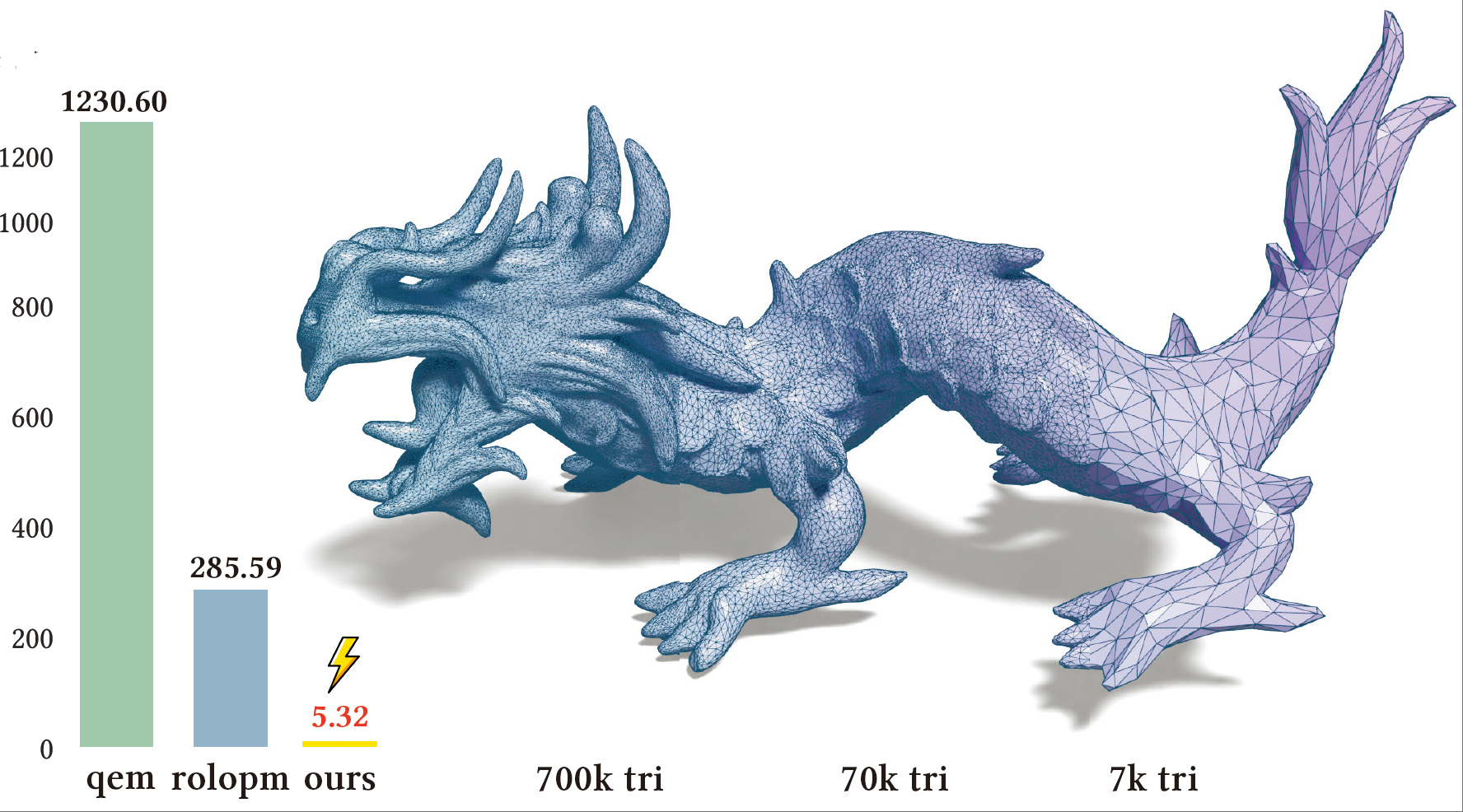

PaMO: Parallel Mesh Optimization for Intersection-Free Low-Poly Modeling on the GPU

PG 2025 (Journal Track) [PDF] [Project] [Code]

Existing mesh simplification methods can introduce self-intersections and surface distortions. Our GPU-based approach addresses both problems by combining parallel re-meshing, intersection-free simplification, and safe projection, producing clean, geometry-preserving low-poly meshes suitable for efficient rendering, editing, and simulation.